Maximilian Dreyer

PhD candidate @Fraunhofer HHI in Berlin

Explainable AI, Interpretability, AI Safety

recent

featured work

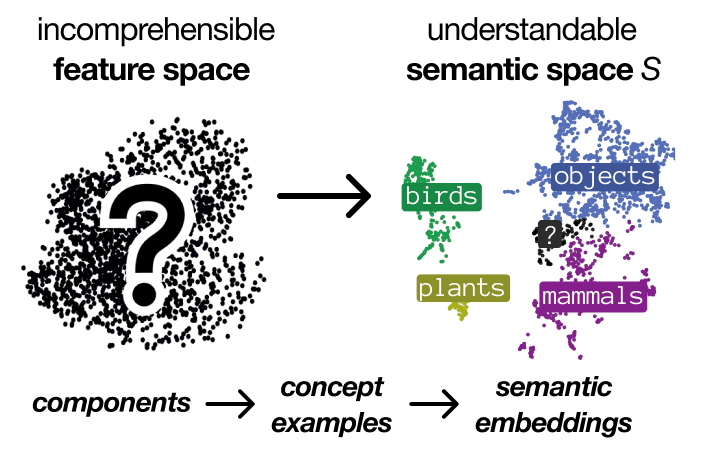

SemanticLens

SemanticLens is a universal explanation method for large AI models that provides systematic understanding and validation of hidden components, which is essential for ensuring security and transparency in safety-critical applications. By mapping the encoded knowledge of components into a semantically structured space, SemanticLens enables users to comprehensively search, describe, compare and audit a model’s inner workings.


January 2025

news

- Nov. 2024 // Talk about Concept-based Model Monitoring and Debugging during the KAIST XAI Tutorial Series.

- Oct. 2024 // Talk about Pruning with XAI during the All Hands Meeting of the German AI Community.

- May 2024 // Talk about Concept-level Model Explanations at the Visual Intelligence workshop on concept-based XAI.

- April 2024 // PCX and PURE accepted at CVPR-24 workshops.

- Feb. 2024 // Talk about Debugging Biases of Deep Medical Models for Stanford MedAI.

work

Mechanistic understanding and validation of large AI models with SemanticLens

by Maximilian Dreyer, Jim Berend, Tobias Labarta, Johanna Vielhaben, Thomas Wiegand, Sebastian Lapuschkin, Wojciech Samek

SemanticLens is a universal explanation method for large AI models that provides systematic understanding and validation of hidden components.

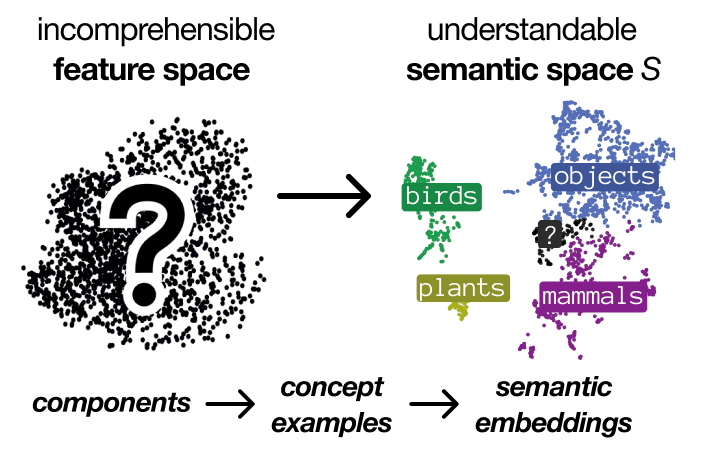

Pruning By Explaining Revisited: Optimizing Attribution Methods to Prune CNNs and Transformers

by Sayed M. V. Hatefi, Maximilian Dreyer, Reduan Achtibat, Thomas Wiegand, Wojciech Samek, Sebastian Lapuschkin

We show with PXP that (optimized) attribution methods are very effective for pruning CNN and transformer models.

published:

ECCV 2024 Workshops

PURE: Turning Polysemantic Neurons Into Pure Features

by Maximilian Dreyer, Erblina Purelku, Johanna Vielhaben, Wojciech Samek, Sebastian Lapuschkin

PURE purifies representations by turning polysemantic units into monosemantic ones, thereby increasing latent interpretability.

published:

CVPRW 2024

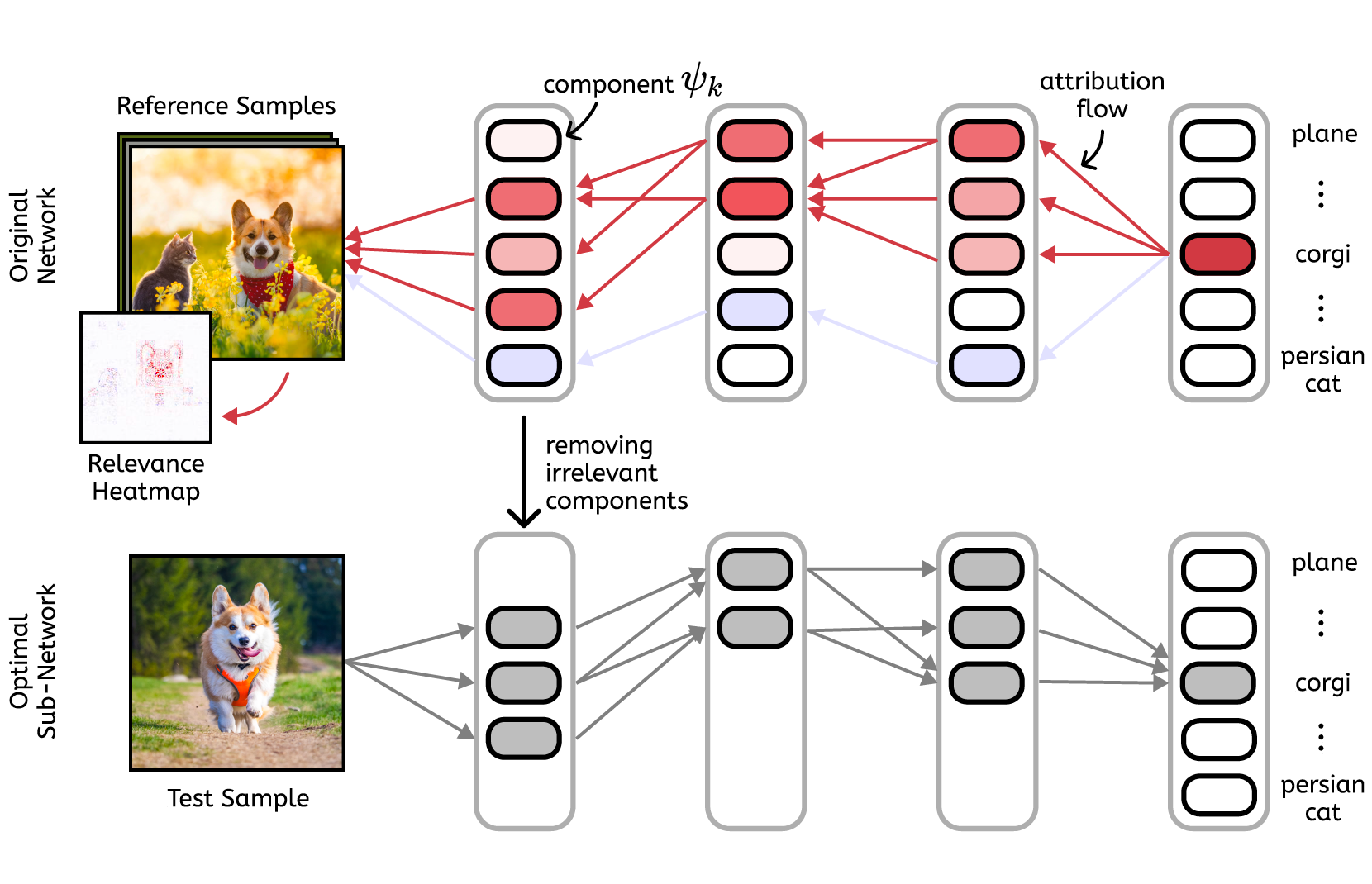

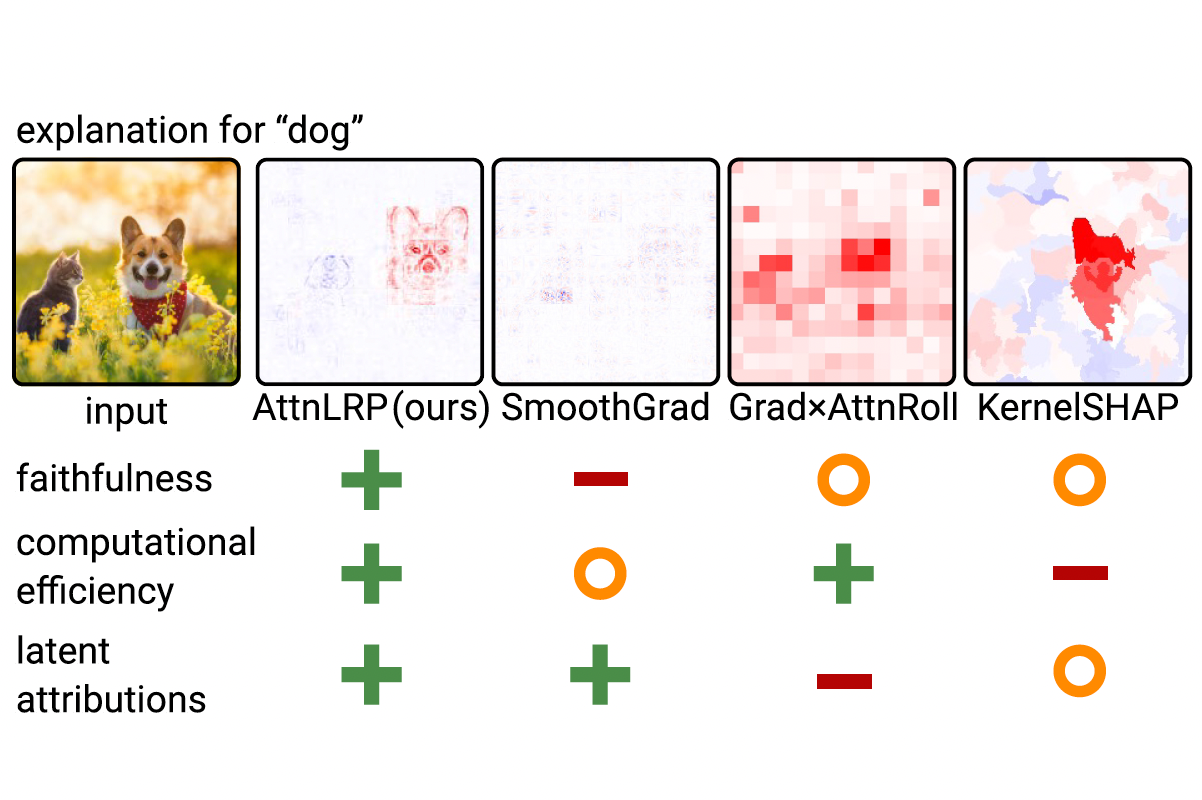

AttnLRP: Attention-Aware Layer-Wise Relevance Propagation for Transformers

by Reduan Achtibat, Sayed M. V. Hatefi, Maximilian Dreyer, Aakriti Jain, Thomas Wiegand, Sebastian Lapuschkin, Wojciech Samek

We extend LRP to handle attention layers in Large Language Models (LLMs) and Vision Transformers (ViTs).

published:

ICML 2024

Understanding the (Extra-)Ordinary: Validating DNN Decisions with Prototypical Concept-based Explanations

by Maximilian Dreyer, Reduan Achtibat, Wojciech Samek, Sebastian Lapuschkin

Prototypes enable an understanding of model (sub-)strategies and allow model predictions to be validated.

published:

CVPRW 2024

From Hope to Safety: Unlearning Biases of Deep Models via Gradient Penalization in Latent Space

by Maximilian Dreyer*, Frederik Pahde*, Christopher J. Anders, Wojciech Samek, Sebastian Lapuschkin

Introducing a novel method that enforces the unlearning of spurious concepts found in AI models.

published:

AAAI 2024

Reveal to Revise: An Explainable AI Life Cycle for Iterative Bias Correction of Deep Models

by Frederik Pahde*, Maximilian Dreyer*, Wojciech Samek, Sebastian Lapuschkin

Introducing Reveal to Revise (R2R), an Explainable AI life cycle to identify and correct model bias.

published:

MICCAI 2023

Revealing Hidden Context Bias of Localization Models

by Maximilian Dreyer, Reduan Achtibat, Thomas Wiegand, Wojciech Samek, Sebastian Lapuschkin

By extending CRP to localization tasks, we are able to precisely identify background bias concepts used by AI models.

published:

CVPRW 2023

From attribution maps to human-understandable explanations through Concept Relevance Propagation

by Reduan Achtibat*, Maximilian Dreyer*, Ilona Eisenbraun, Sebastian Bosse, Thomas Wiegand, Wojciech Samek, Sebastian Lapuschkin

Introducing Concept Relevance Propagation (CRP) as a local and global XAI method to understand the hidden concepts used by AI models.

published:

Nature Machine Intelligence

Explainability-Driven Quantization for Low-Bit and Sparse DNNs

by Daniel Becking, Maximilian Dreyer, Wojciech Samek, Karsten Müller, Sebastian Lapuschkin

XAI-adjusted quantization to generate sparse neural networks while maintaining or even improving model performance.

published:

xxAI - Beyond Explainable AI


September 2021

cv

Education

Master of Science: Computational Science

2020 - 2022

University of Potsdam, Potsdam

Advanced studies of computational science with a focus on statistical data analysis and artificial intelligence.

Bachelor of Science: Physics

2015 - 2019

Humboldt-University, Berlin

Basic studies of physics.

2018

Uppsala-University, Uppsala

Studies in Uppsala for one semester as a part of the Erasmus exchange programme.

Working Experience

Research Associate / PhD Student

since July 2022

Fraunhofer HHI, Berlin

Research Associate in the Machine Learning Group for Explainable AI (XAI). Advancement and application of concept-based XAI methods, also touching on AI robustness.

Research Assistant

November 2020 - July 2022

Fraunhofer HHI, Berlin

Research Assistant in the Machine Learning Group for Explainable AI (XAI). Application and adaptation of XAI methods to segmentation and object detection models. Application of XAI to make Deep Neural Networks more efficient through quantization. Development of concept-based explainability methods.

Working Student

June 2019 - November 2020

IAV, Berlin

Tool development, modeling of car motion/behaviour, simulations to analyze safety limits for driving functions. Visualization and interpretation of high-dimensional experimental results.

Working Student, Bachelor Thesis

November 2018 - June 2019

Baumer Hübner, Berlin

General research on magnetic pole rings and magnetization processes. Planning and performing measurements and analyzing the data. Analyzing the magnetic behavior of magnetic pole rings with small diameters. Comparing an analytical model, FEM simulation and experimental measurements.

Working Student

July 2016 - September 2018

DESY, Zeuthen

Supervising school classes visiting the vacuum lab of DESY in Zeuthen. Member of the organizing team for the TeVPA 2018 conference.

contact

Welcome to my homepage!

I live in Berlin and work at Fraunhofer HHI to increase AI transparency. Analyzing and using data to create helpful and interesting results is what made me fall in love with data analytics and programming. Besides my work and studies in these areas, I like to dive deeper into web development. Please feel free to contact me via maximilian.dreyer[@]hhi.fraunhofer.de or on LinkedIn.